Numbers

Numbers are weird.

Here’s the number 10 in this sentence. My brain narrator reads that as “ten” in my secret voice. It’s probably ten. But it could also be 2 or 16. It depends!

Most[1] humans count using a base 10 system: we have ten different glyphs to represent a single digit, and have to string together multiple glyphs to express anything larger than nine. Binary is a base 2 system with only two glyphs, 0 and 1, so any number larger than 1 requires additional digits. There are several other common numeral systems used in software:

- Octal (base 8): most commonly seen with file permissions in Unix-y operating systems

- Hexadecimal (base 16): includes ABCDEF as additional glyphs, often used for color codes (e.g. #FF0000 for red)

- Base 32 and Base 64: used to encode data for convenient[2] and safe[3] transfer between systems
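JavaScript happens to have literal prefixes for a few of these systems, which makes for a quick illustration. This is only a sketch: the variable names are made up for the example, and `0o755` is just a commonly seen permission value, not something from this page.

```javascript
// Literal prefixes select the base; the resulting values are ordinary numbers.
const binaryTen = 0b1010;  // 10
const permissions = 0o755; // 493, usually displayed as rwxr-xr-x
const red = 0xff0000;      // 16711680

// Each pair of hex digits in a color code is one 8-bit channel.
const channels = {
  r: (red >> 16) & 0xff, // 255
  g: (red >> 8) & 0xff,  // 0
  b: red & 0xff,         // 0
};
```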

The number 10 is certainly “ten” in the decimal system, but it’s also legal notation in both the binary and hexadecimal systems.

Counting upward in each system shows how binary and hexadecimal increment compared to decimal: 10 in binary is 2 in decimal, and 10 in hex is 16 in decimal.
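The same thing can be checked in code. A minimal sketch using the second (radix) argument of `parseInt()` and `Number.prototype.toString()`; the variable names are only for illustration.

```javascript
// The same two characters, read under three different bases.
const asBinary = parseInt("10", 2);   // 2
const asDecimal = parseInt("10", 10); // 10
const asHex = parseInt("10", 16);     // 16

// And the reverse: the value ten written out in three notations.
const ten = 10;
const notations = [ten.toString(2), ten.toString(10), ten.toString(16)];
// ["1010", "10", "a"]
```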

Encoding/converting

Here’s some even more confusing trivia: the number 10 (decimal) can be expressed in binary as 1010. Except for this 10 on the screen, which is actually (well, probably[4]) 0011000100110000.

The number 10 is not the same as the two characters "1" and "0". That binary representation is composed of two bytes (2 × 8 bits):

    00110001 = 49
    00110000 = 48

The numbers 49 and 48 refer to two glyphs on a character encoding table. Those glyphs are not numbers, they are food for fonts.
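To see the difference between the text and the number, here is a small sketch. It assumes an ASCII-compatible encoding; `charCodeAt()` technically returns UTF-16 code units, which match ASCII for these two characters.

```javascript
const text = "10";

// Character codes of the two glyphs: [49, 48]
const codes = [...text].map((ch) => ch.charCodeAt(0));

// The same codes as 8-bit binary strings: ["00110001", "00110000"]
const bytes = codes.map((code) => code.toString(2).padStart(8, "0"));

// The actual number ten in binary, for comparison: "1010"
const numberBits = (10).toString(2);
```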

Numbers have to be converted to or from text. There’s a function called parseInt() in JavaScript that tries to convert text into a “real” number:

    const number = parseInt("10")

I used this for a shamefully long time before ever really thinking about what it does. That trusty One Weird Trick™ that pops the quotes off of string-numbers and turns them into, uh, number-numbers.

But parseInt("10") doesn’t just mysteriously pop the quotes off from that "10". It does mathemagics:

- Iterate over each character in the string ("1", "0"),

- map each character code to its numeric counterpart (49 -> 1, 48 -> 0), and then

- do whatever it does to end up with the resulting quoteless 10 (because surprise, it can parse “numbers” in many different numeral systems[5], not just decimal).
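The steps above can be sketched by hand. `parseDigits` is a hypothetical helper, not the real `parseInt()` algorithm: it ignores signs, whitespace, and the "0x" prefix, and it returns NaN on any invalid character, whereas the real thing keeps whatever it managed to parse before that point.

```javascript
// Hypothetical helper for illustration only.
function parseDigits(text, radix = 10) {
  if (text.length === 0) return NaN;
  let value = 0;
  for (const ch of text) {
    const code = ch.charCodeAt(0);
    let digit;
    if (code >= 48 && code <= 57) digit = code - 48;            // "0".."9" -> 0..9
    else if (code >= 97 && code <= 122) digit = code - 97 + 10; // "a".."z" -> 10..35
    else if (code >= 65 && code <= 90) digit = code - 65 + 10;  // "A".."Z" -> 10..35
    else return NaN;
    if (digit >= radix) return NaN; // glyph is not legal in this base
    // Shift everything parsed so far one place to the left, then add the digit.
    value = value * radix + digit;
  }
  return value;
}
```

With that, `parseDigits("10")` gives 10, `parseDigits("10", 2)` gives 2, and `parseDigits("10", 16)` gives 16.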

Also there’s different sizes

Many programming languages offer a variety of data types for hanging on to numbers. They can have different sizes (measured in bits), include or prohibit fractions, be signed or unsigned[6], etc. All of these parameters affect the minimum and maximum values that can be stored for a number.

Every number horsing around inside a computer has a limit. They cannot be infinite[7], even if that limit is physical disk space. An 8-bit unsigned integer has 256 (2^8 = 256) possible combinations, so its maximum value is 255. Here are a few more examples of unsigned integers and their upper limits:

| Bits | Maximum value |
|---|---|
| 8 | 255 |
| 16 | 65,535 (~65 thousand) |
| 32 | 4,294,967,295 (~4 billion) |
| 64 | 18,446,744,073,709,551,615 (~18 quintillion) |
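These limits all follow the same formula, 2^bits - 1. A minimal sketch using BigInt so that the 64-bit value stays exact; `maxUnsigned` is a made-up helper name.

```javascript
// Largest value an unsigned integer of the given width can hold: 2^bits - 1.
function maxUnsigned(bits) {
  return (1n << BigInt(bits)) - 1n;
}

const limits = [8, 16, 32, 64].map((bits) => maxUnsigned(bits));
// [255n, 65535n, 4294967295n, 18446744073709551615n]

// The same calculation with plain numbers loses the low digits:
const rounded = String(2 ** 64 - 1); // "18446744073709552000"
```

The last line is worth a second look: a plain JavaScript number cannot represent the 64-bit maximum exactly, so it prints a rounded value instead.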

These are fairly common number types found in many programming languages. Some even have variable types dependent on platform architecture, like usize in Rust.

JavaScript, on the other hand, has two whole number types to choose from:

| Name | Bits | Maximum value |
|---|---|---|
| number | 64 | 9,007,199,254,740,991 (~9 quadrillion, sometimes) |
| BigInt | 1073741824 | Huge (~Jesus Christ) |

All “plain” numbers in JavaScript are 64-bit floating point values, which implies the following non-negotiable terms:

- These numbers are not integers. Even if a number doesn’t include fractional digits, some bits are reserved to do so, and there’s a threshold after which large numbers degrade in accuracy.

- They’re signed; one of those bits is reserved to indicate whether it’s negative or not.
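Both terms are easy to poke at in a console. A small sketch; the variable names are only for illustration.

```javascript
// The threshold after which whole numbers stop being reliable: 2^53 - 1.
const limit = Number.MAX_SAFE_INTEGER; // 9007199254740991

// Past the threshold, neighbouring integers collapse into the same value.
const collides = limit + 1 === limit + 2; // true

// BigInt keeps the exact value instead.
const exact = BigInt(limit) + 2n; // 9007199254740993n

// The sign bit exists even for zero: -0 and 0 are distinguishable.
const signedZero = Object.is(-0, 0); // false
```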

Then there’s BigInt, in case you’re dealing with numbers in the realm of whatillions. If you’re not, don’t use them. They’re terribly slow.

In Conclusion

Numbers are weird.

Plot Twist

Of course I’m not done with BigInt. This whole page is an excuse to share the absurdity of BigInt.

Pinning up that table with that ridiculous number — you know which one — and then leaving it alone would be a crime.

The language specification doesn’t seem to define a size limit on these; that insane bit resolution is a soft limit in the V8 source code:

    static const int kMaxLengthBits = 1 << 30;  // ~1 billion.

That is over one billion bits.

The reason I didn’t embed the maximum value for these in the table above is that I either don’t know how, am confused about how to actually compute it, or feel guilty enough about the misleading number limit. Or all of the above. There are also a few remaining unknowns around the thing that would require more than a Saturday to understand.

I still wanted to see what a theoretical uint1073741824 would look like though, as inconceivable as it is predetermined to be. I tried to compute this using a combination of BigInt and bignumber.js in Deno:

import BigNumber from 'npm:bignumber.js'

BigNumber.config({

RANGE: [ -1, 1e9 ],

DECIMAL_PLACES: 0,

EXPONENTIAL_AT: 1e9,

})

const bits = 1n << 30n

// Normally `(2 ** bits) - 1` would produce the number I want, but since

// that would exceed the limitations of BigInt on its own I split this up into

// two steps. Note the difference in parentheses here:

const n = 2n ** (bits - 1n)

// Now hand off to bignumber for the final step: `n * 2 - 1`

const result = new BigNumber(n)

.times(2)

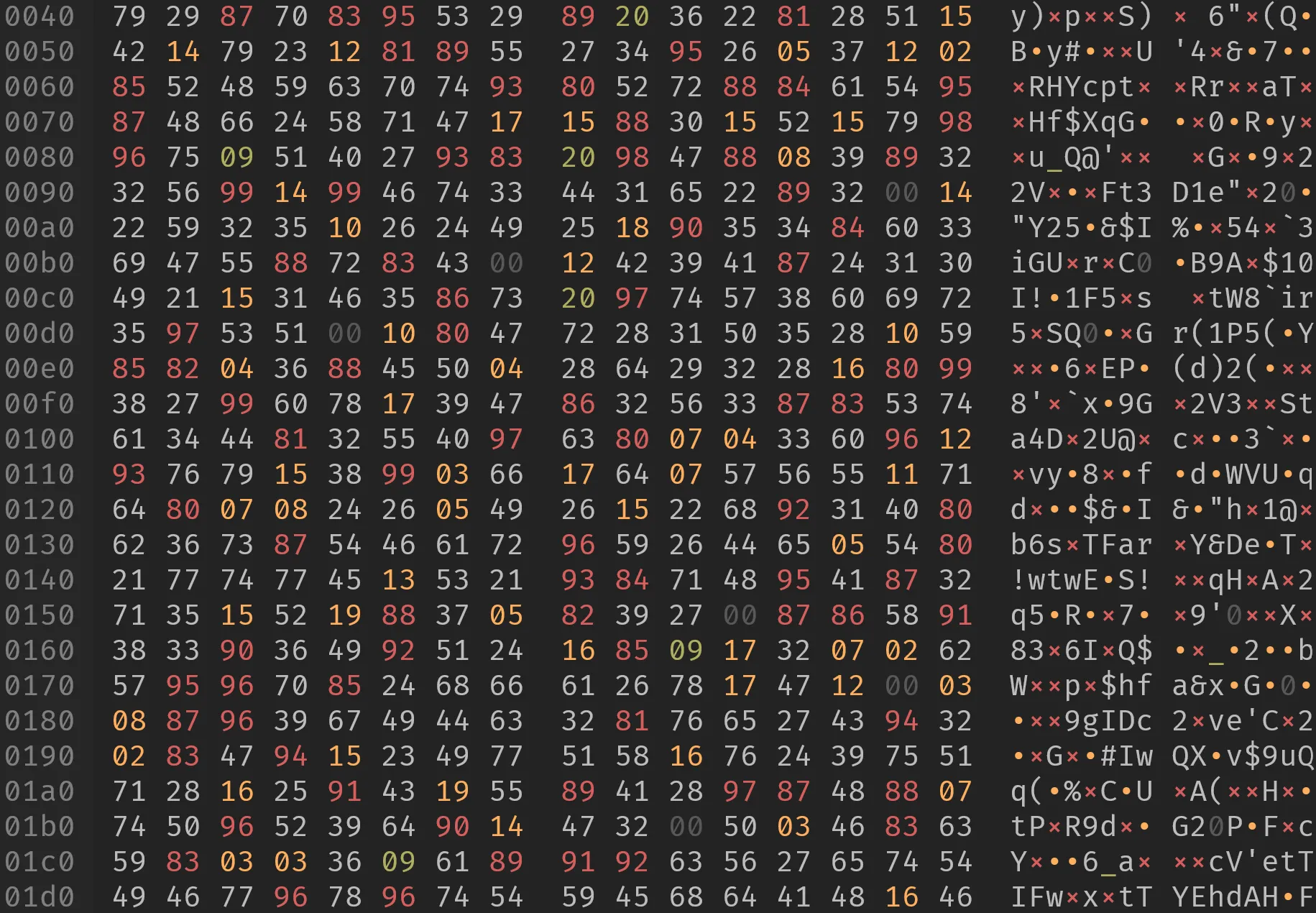

.minus(1)…and the result:

That is either the maximum value one can store in an imaginary uint1073741824, or it is a random unrelated number. It’s hard to tell, I can’t realistically hand-check my work here.
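Hand-checking is out of the question, but two cheap sanity checks are possible. This is a sketch under my own assumptions, not part of the original computation: it runs the same two-step trick at a width where the answer is known, and it estimates how many decimal digits the full-size result should have using floor(bits × log10(2)) + 1. `maxViaTwoSteps` is a made-up helper name.

```javascript
// The same two-step trick, done entirely in BigInt so it can run at small widths.
function maxViaTwoSteps(bits) {
  const half = 2n ** (bits - 1n); // 2^(bits - 1)
  return half * 2n - 1n;          // 2^bits - 1
}

// At 64 bits the answer is well known, so the trick can be verified.
const matches64 = maxViaTwoSteps(64n) === 18446744073709551615n; // true

// 2^bits - 1 has the same number of decimal digits as 2^bits,
// which is floor(bits * log10(2)) + 1.
const digits = Math.floor(2 ** 30 * Math.log10(2)) + 1;
// Roughly 323 million digits for bits = 2^30.
```

If the real result has a wildly different length than `digits`, something went wrong along the way.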

The number is so large that the uncompressed text costs over 300 MB of disk space. To embed it on this page I packed two digits into every byte to effectively halve the file size. The page then loads the data from that file in progressive “chunks” by sending a “Range” header[8] with the request. Each chunk is unpacked and stitched back together before being injected onto the page:

    const chunk = await getNextChunk()
    let output = ''

    for (const byte of chunk.bytes) {
      // One byte contains two digits between 0 and 9, so we split them here:
      //      v-- "a" (low four bits)
      //   0xFF
      //     ^-- "b" (high four bits)
      const a = byte & 0x0F
      const b = (byte & 0xF0) >> 4

      // Now map each number to its character code counterpart (0 -> "0"). Zero
      // starts at 48, so we can simply add 48 to these:
      output += String.fromCharCode(48 + a)
      output += String.fromCharCode(48 + b)
    }

    return output

Keep your eyes peeled for my next boring post where I waste several weekends obsessing over obscure control characters.

Footnotes

1. There are actually a few other interesting ones, such as the Kaktovik system in base 20.

2. 32 and 64 are what we call “nice computer numbers” in this business. They divide cleanly when split into octets.

3. I used the word “safe” here in terms of data validity, such as storing text that contains emojis in a database that doesn’t support emojis.

4. Dependent on encoding: the “front page” of most modern character sets shares the same familiar ASCII-ish table map. I think.

5. Base 2 (binary) up to base 36 (the whole dang English alphabet).

6. “Unsigned” numbers can only be positive and start at zero, whereas “signed” numbers may be negative. Signed numbers reserve one bit to indicate whether a number is positive or negative.

7. The Infinity symbol in JavaScript doesn’t actually mean infinity, it means it gave up.

8. Range headers can be used to request partial data from a remote resource. I’m using byte ranges here: Range: bytes=<from>-<to>. This is also the first time I’ve ever used this!