Re-Mixer

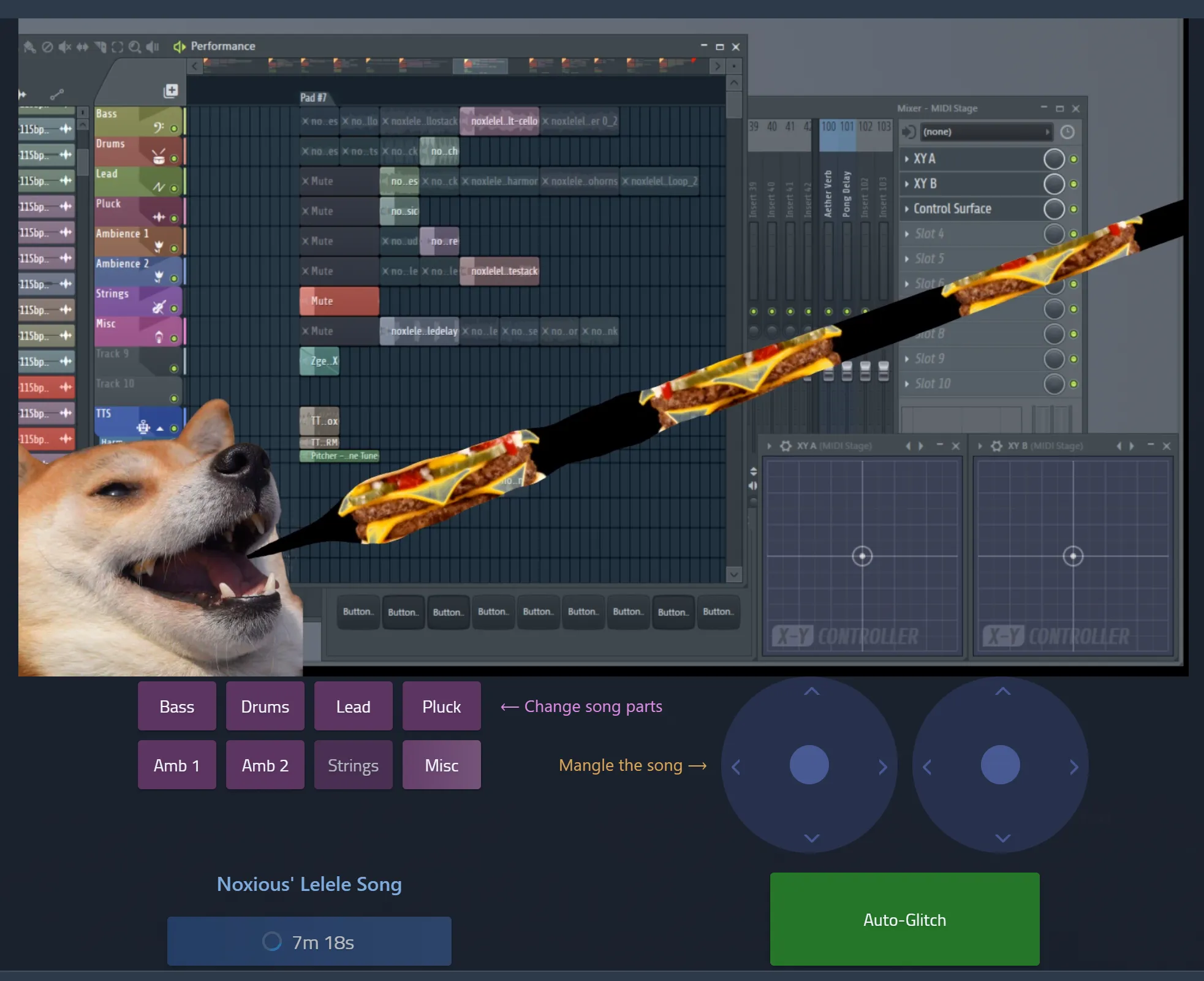

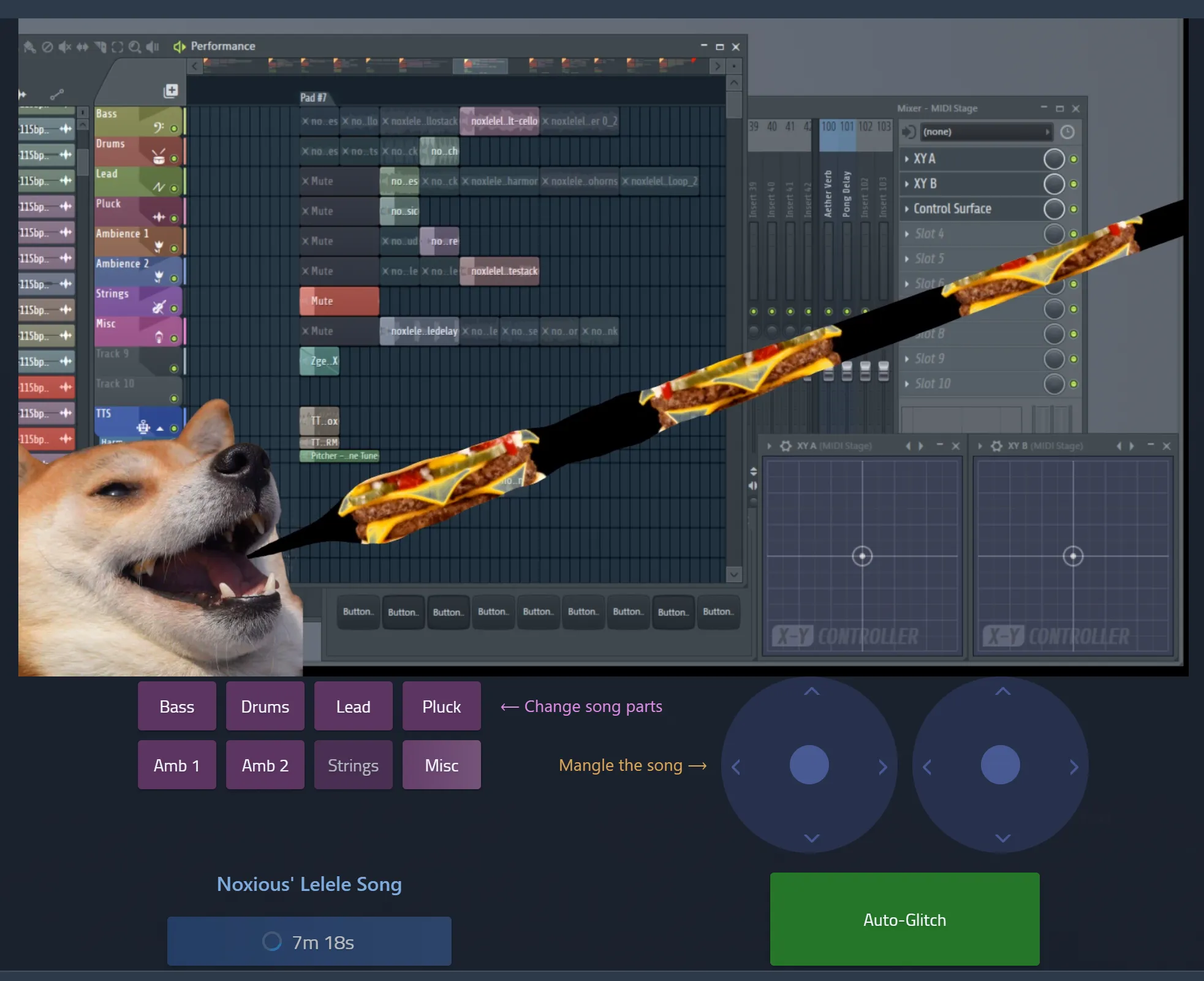

I built a variant of my crowd-sourced music bot thing that works on Mixer, a video streaming platform. Mixer provides an incredibly powerful API for implementing interactive controls to accompany a stream, which I used to set up a fairly simple control layout for viewers to directly affect a playlist that I had created - essentially a live show that internet strangers could perform themselves!

I disassembled a bunch of songs that I’ve written into layered patterns: drum loops, lead patterns, bass, etc. I arranged these into a sequence of songs in FL Studio, and by enabling its performance mode I could use incoming MIDI note-on messages to advance patterns during playback. These messages are triggered by a set of virtual buttons on my stream’s channel page. I also set up two virtual joysticks to manipulate X/Y pads that are tied to eight glitchy effect processors, and another button to progress the playlist to the next song.
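For anyone unfamiliar with what a note-on message looks like on the wire, here is a minimal sketch of the three bytes involved. The `noteOnBytes` helper and the note numbers in `clipNotes` are hypothetical and only illustrate the standard MIDI byte layout; which notes actually trigger which patterns depends on how the FL Studio project is mapped.

```javascript
// Build the three raw bytes of a MIDI note-on message.
// channel is 1-16 (as most DAWs display it); note and velocity are 0-127.
function noteOnBytes (channel, note, velocity = 127) {
  if (!Number.isInteger(channel) || channel < 1 || channel > 16) {
    throw new RangeError('channel must be an integer from 1 to 16')
  }
  for (const byte of [note, velocity]) {
    if (!Number.isInteger(byte) || byte < 0 || byte > 127) {
      throw new RangeError('note and velocity must be integers from 0 to 127')
    }
  }
  // Status byte 0x9n means "note-on, channel n" (n is zero-based on the wire)
  const status = 0x90 | (channel - 1)
  return [status, note, velocity]
}

// Hypothetical mapping from a button's layer to the note that advances it
const clipNotes = { drums: 36, bass: 37, lead: 38 }

console.log(noteOnBytes(1, clipNotes.drums))
```

The resulting array can be handed to whatever MIDI output is in use; the validation is there because out-of-range bytes would be misread as status bytes by the receiving device.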

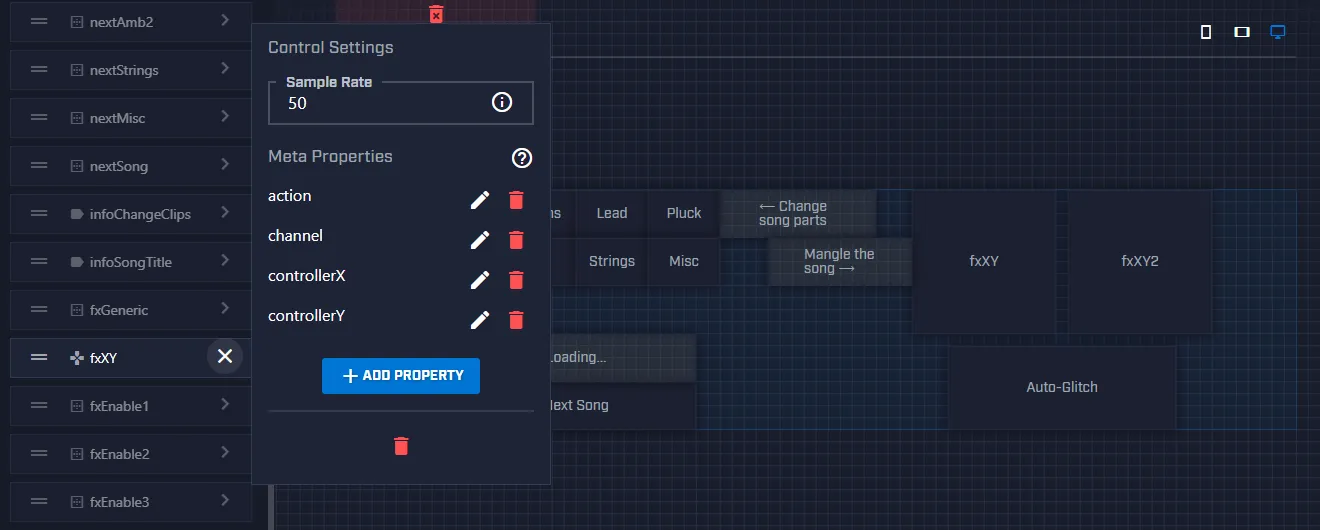

I designed the control layout using Mixer’s editor. Each control has custom meta properties attached to it that define its functionality in my app. Every control has an action meta property with a string value; these roughly equate to functions in the app, or more accurately event listener namespaces. Here’s a screenshot of the editor with one of the joysticks’ settings opened:

The first joystick’s meta properties look like this:

    {
      "action": "fxXY",   // Event name to listen to in the app
      "channel": 1,       // The MIDI channel it will talk to
      "controllerX": 8,   // MIDI CC parameter for the X axis
      "controllerY": 9    // MIDI CC parameter for the Y axis
    }

The app consists of three core components: chat, game, and MIDI. These are instantiated in an app.js entrypoint and rigged to perform special functions.
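To make the "action as event listener namespace" idea concrete, here is a minimal sketch of how an incoming control input could be routed by its action meta property. It is not the app's actual code: `ControlRouter`, `handleInput`, and the simplified `{ controlID, meta }` and `{ controlID, value }` shapes are assumptions made for illustration, and the real Mixer payloads carry more data than this.

```javascript
const { EventEmitter } = require('events')

// Hypothetical router: looks up a control's meta properties by ID and
// re-emits each input under the name stored in its "action" property.
class ControlRouter extends EventEmitter {
  constructor (controls) {
    super()
    // Map of controlID -> meta properties (simplified, assumed shape)
    this.metaById = new Map(controls.map(c => [c.controlID, c.meta]))
  }

  // input is assumed to look like { controlID, value }
  handleInput (input) {
    const meta = this.metaById.get(input.controlID)
    if (!meta || typeof meta.action !== 'string') return false
    // Listeners receive the meta properties alongside the raw value
    this.emit(meta.action, { meta, value: input.value })
    return true
  }
}

// Usage with the first joystick's meta properties from above
const router = new ControlRouter([
  { controlID: 'joystick1', meta: { action: 'fxXY', channel: 1, controllerX: 8, controllerY: 9 } }
])
router.on('fxXY', payload => console.log(payload.meta.controllerX, payload.value))
router.handleInput({ controlID: 'joystick1', value: [0.5, 0.25] })
```

Keeping the routing data in the control layout means a new button can reuse an existing handler just by sharing its action string, without touching the app code.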

The chat component watches the chatroom during the stream and reads participants’ chat messages out loud, using text-to-speech output fed into a pitch correction VST (and yes, this is extremely dangerous). It also allows me to issue commands via chat:

    // Skip to a specific song in the playlist, only available to moderators
    chatbot.registerCommand('load', ['Owner', 'Mod'], (msg, songName) => {
      loadSong(songName)
    })

    // Modify app settings (disable text-to-speech, change cooldown on buttons)
    chatbot.registerCommand('set', ['Owner', 'Mod'], handleChatUpdateConfig)

    // Start or stop the app
    chatbot.registerCommand('start', 'Owner', () => mixerbot.start())
    chatbot.registerCommand('stop', 'Owner', () => mixerbot.stop())

    // Relay chat messages to the text-to-speech service
    chatbot.on('message', msg => sendChatToVoice(msg))

The “game” component interfaces with Mixer’s interactive API. I’ve designed it so that I can listen for input events from Mixer and respond however I’d like by referencing that custom action meta property on each control:

    // All of the pattern-changing buttons
    mixerbot.on('nextClip', payload => handleNextClipButton(payload))

    // The two virtual joysticks
    mixerbot.on('fxXY', payload => handleJoystickMove(payload))

    // The "next song" button
    mixerbot.on('nextSong', payload => payload.value && loadSong('next'))

Lastly, the MIDI bot relays controls to the Raspberry Pi on my desk. Here’s a part of the event handler function for the virtual joysticks:

    /**
     * Reacts to an input event from the virtual joysticks.
     * `payload` is a pile of event data from Mixer, including
     * the value of both the X and Y axes. These are translated
     * to a 0-127 range to meet the expected resolution of a MIDI
     * CC value and sent as two separate parameters to my host
     * computer.
     */
    function handleJoystickMove (payload) {
      const { controllerX, controllerY, channel } = payload.meta
      let [ x, y ] = payload.value

      x = Math.round(x * 127)
      y = Math.round(y * 127)

      midibot.send({
        type: 'cc',
        quantize: false,
        value: {
          controller: controllerX,
          channel,
          value: x
        }
      })

      midibot.send({
        type: 'cc',
        quantize: false,
        value: {
          controller: controllerY,
          channel,
          value: y
        }
      })

      // ...
    }

I’ve also started working on another component that creates an overlay for the video stream; capturing my DAW window isn’t the nicest presentation.
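One footnote on the joystick handler above: multiplying by 127 only lands in the 0-127 range if each axis arrives as a value between 0 and 1. A minimal sketch of a more defensive conversion follows, assuming the incoming range is known and passed in explicitly. `axisToCc` and `joystickToCcMessages` are hypothetical helpers, not part of the app; the message shape simply mirrors what the handler passes to `midibot.send`.

```javascript
// Convert an axis value from the range [min, max] to a 7-bit CC value (0-127).
// Values outside the range are clamped rather than wrapped.
function axisToCc (value, min = 0, max = 1) {
  const normalized = (value - min) / (max - min)
  const clamped = Math.min(1, Math.max(0, normalized))
  return Math.round(clamped * 127)
}

// Build both CC messages for a joystick event without sending them.
// The payload shape ({ meta, value: [x, y] }) follows the handler above.
function joystickToCcMessages (payload, min = 0, max = 1) {
  const { controllerX, controllerY, channel } = payload.meta
  const [x, y] = payload.value
  return [[controllerX, x], [controllerY, y]].map(([controller, axis]) => ({
    type: 'cc',
    quantize: false,
    value: { controller, channel, value: axisToCc(axis, min, max) }
  }))
}

// Example: axes reported between -1 and 1 (an assumed range)
const messages = joystickToCcMessages(
  { meta: { controllerX: 8, controllerY: 9, channel: 1 }, value: [1, -1] },
  -1,
  1
)
console.log(messages)
```

Separating the conversion from the sending also removes the duplicated `midibot.send` call: the handler could loop over the returned messages instead.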